Note: This is the last post in the HyFlex Learning Community series describing a set of custom GPTs built to support designers and faculty with their HyFlex design efforts. These GPTs are built for ChatGPT, but the concepts can be applied to any GenAI platform through thoughtful prompting. For more information on specific GPT instructions (the prompt language), contact hyflexlearning@gmail.com.

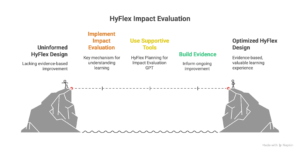

Evaluating the impact of a HyFlex course is often the last thing faculty and designers think about, but it is one of the most important aspects of design and should not be an afterthought. Planning for impact evaluation should be part of the initial design process, at least as a first pass; later, new evaluation questions may arise that call for different methods. Impact evaluation helps instructors, programs, and institutions understand whether HyFlex is achieving its goals: supporting student choice, improving access, enabling equitable participation across modalities, and sustaining learning outcomes. Yet, compared with more traditional formats, HyFlex raises new questions. How do we assess student learning and engagement across different participation paths? What indicators matter most when evaluating the effectiveness of a HyFlex offering? What counts as evidence of “success” in such a flexible design?

To help faculty and designers answer these questions more confidently, the HyFlex Planning for Impact Evaluation GPT provides guided support for clarifying evaluation goals, selecting meaningful indicators, and planning data collection approaches aligned with the realities of HyFlex teaching.

When a Good Idea Needs Good Evidence (a story about the need for design support)

Professor Elena Martinez had been teaching her undergraduate Research Methods course in a HyFlex format for three semesters. She liked the rhythm of it now: the interplay among the students who came to the room, those who joined online, and those who participated asynchronously later in the week. Her students used the flexibility well. Many were working, caregiving, or commuting long distances. A few simply needed a choice on any given day. Elena felt proud that her course made space for their realities without compromising learning.

During a routine department meeting, her chair asked a question that caught her off guard.

“I’m glad your students seem to appreciate the flexibility,” she said, “but I’m still not sure why we need HyFlex for this class. Couldn’t a well-designed online or traditional format meet the same needs with less complexity? Before more faculty jump into HyFlex, we’ll need clearer evidence that it actually makes a positive difference.”

She wasn’t hostile. Just practical. Concerned about workload, consistency, and the possibility that HyFlex might become a trend without substance. But the question landed heavily.

Elena knew HyFlex was making a difference. She saw increased participation from students who had previously struggled to attend regularly. She saw stronger project submissions from those who used the mode that fit their week’s situation. She heard more than once, in advising meetings and end-of-semester reflections, how much students appreciated having real agency in how, when, and where they learned.

But when her chair asked for evidence, she froze.

She had end-of-semester comments and a few powerful anecdotes. But no structured data. No clear indicators. No analysis of patterns across participation modes. Without that, she couldn’t convincingly defend the design decisions she believed in so strongly.

Walking back to her office that afternoon, Elena realized she needed more than good intentions. She needed a plan.

A clear set of questions. A small but meaningful set of indicators. A practical way to compare experiences and outcomes across modalities. Not a research study, just a thoughtful, intentional approach to gathering and interpreting evidence.

That’s when she turned to the HyFlex Planning for Impact Evaluation GPT.

What the HyFlex Planning for Impact Evaluation GPT Does

This custom GPT is designed to help instructors move beyond informal impressions of “how it went” and toward a structured, formative evaluation plan tailored to HyFlex. It supports users in:

- Identifying appropriate evaluation questions aligned with the course and program goals
- Choosing indicators that reflect learning, engagement, access, equity, and modality-related experiences
- Planning data collection approaches that work across synchronous, asynchronous, online, and in-person contexts
- Anticipating where modality choice may shape the interpretation of results
- Producing a short, usable evaluation plan to guide design revisions or departmental reporting

The tool can generate evaluation frameworks, suggest instruments, and help users interpret potential findings through a HyFlex lens. It does not replace formal institutional research processes, but it helps faculty create a meaningful, course-level plan grounded in clear questions and flexible evidence sources.

Where Faculty and Designers Often Struggle

In workshops and consultations, faculty frequently express uncertainty about evaluating HyFlex courses because:

- Modalities differ, so evidence differs. What counts as engagement or participation may look very different across modes.
- Student choice complicates comparisons. Instructors may wonder whether outcomes should be compared across modalities or aggregated.
- Institutional expectations are unclear. Many colleges want data on HyFlex effectiveness but offer limited guidance on what that means.
- Workload pressures crowd out evaluation. Faculty often feel too stretched to design a structured evaluation process without support.

The Impact Evaluation GPT helps instructors navigate these tensions by grounding evaluation planning in actionable questions and pragmatic data strategies.

Jordan’s Design Journey Continues …

Jordan, a colleague preparing to offer a redesigned HyFlex course, wants to know more than whether students “liked” the experience. She wants to understand how students used the different modes, whether learning outcomes were comparable, and what patterns of engagement emerged across the term.

Using the Impact Evaluation GPT, Jordan begins by describing her goals for the course. The GPT helps her articulate three evaluation questions: one focused on learning outcomes, one on engagement across participation modes, and one on student perceptions of access and support. It then proposes a small set of indicators and low-effort data sources she can use, such as LMS analytics, short pulse surveys, and reflective check-ins at key points in the term.

By the end of the conversation, Jordan has a manageable evaluation plan that aligns with her teaching goals and provides data she can use to refine next semester’s offering.

A Taste of What the Research Tells Us About Evaluating HyFlex Courses

A growing body of HyFlex research highlights the importance and complexity of evaluating impact across multiple dimensions. Several themes from the literature can guide instructors as they plan effective evaluation strategies. Watch for a blog post and an HLC Gathering (webinar) in early 2026 with a more thorough summary of recent HyFlex research.

1. Learning outcomes across modalities are often comparable, but nuanced.

Studies consistently report that learning outcomes in HyFlex tend to be similar across modalities. For example, Lakhal et al. (2014) found no significant differences in satisfaction, multiple-choice test scores, or written exam scores across students’ chosen modes, though differences appeared in continuous assessment scores.

Similarly, Kyei-Blankson and Godwyll (2010) reported that students’ expectations and needs were largely met, and instructors perceived performance to be comparable to face-to-face offerings.

These findings suggest that faculty should examine outcomes across modalities but avoid assuming inherent performance gaps.

2. Engagement patterns matter and are influenced by psychological and contextual factors.

HyFlex research highlights the importance of motivation, self-efficacy, community of inquiry (CoI) presences (social, teaching, and cognitive), and perceived support. Xu et al. (2024) found that self-efficacy, motivation, and perceived CoI presences directly predicted engagement, with CoI factors mediating several relationships.

Student-reported engagement benefits of HyFlex have also been documented. Adeel and colleagues (2023) noted that over 86 percent of students found HyFlex features helpful for accessing and engaging with content.

This means evaluation plans should look beyond attendance counts and include indicators of affective engagement, perceived support, and sense of presence.

3. Equity and student choice shape impact.

HyFlex offers students autonomy in managing their learning, but equity considerations vary. Mahande et al. (2024) demonstrated that modality preferences were linked to learning equity, with student cognitive styles influencing the ways they used HyFlex options.

Evaluation plans should therefore attend to patterns of modality use, student reasons for choosing different modes, and how well instructional supports function across those modes.

4. Faculty experiences and institutional readiness affect HyFlex quality.

Faculty report meaningful challenges when teaching HyFlex. Li et al. (2023) found that academics perceived lower interaction and higher workload in hybrid/HyFlex settings, despite strong technical readiness.

At the institutional level, Zamora’s (2025) preliminary findings on the Comprehensive Institutional Model (CIM) highlight the importance of technology investment, communication loops, and stakeholder alignment in improving teaching effectiveness and sustaining HyFlex programs.

Evaluating HyFlex impact is therefore not only about student outcomes but also about instructor workload, support, and systemic infrastructure.

5. Process evaluations reveal students’ perceived benefits and areas for improvement.

Wong’s (2022) post-lecture evaluations of a HyFlex course found generally positive perceptions related to well-being, competence, and readiness to engage. These kinds of evaluations complement outcome measures and help educators identify early opportunities to adjust course design.

How to Use the Impact Evaluation GPT in Your Workflow

Here are three practical ways to integrate the HyFlex Planning for Impact Evaluation GPT into your HyFlex design or redesign cycle:

- Early in the planning process: Use the GPT to clarify your evaluation questions and align them with course goals and HyFlex pillars.
- Mid-semester: Revisit the GPT with a short reflection on how the course is unfolding; generate a quick pulse survey or observation checklist.
- Post-course review: Use the GPT to interpret your collected data, explore modality-specific insights, and create a brief summary for your department or future iterations.

The goal is not to produce a large research study but to build a sustainable practice of inquiry that strengthens HyFlex offerings over time. And if you have the opportunity to share what you’ve learned with others, please do!

As a Final Thought, Consider This …

Impact evaluation is not an add-on to HyFlex; it is a key mechanism for understanding how learner choice, flexibility, and participation across multiple modalities shape the learning experience. With supportive tools like the HyFlex Planning for Impact Evaluation GPT, faculty can approach this stage with clarity and confidence, building evidence that informs ongoing improvement and demonstrates the value of HyFlex design.

Try the GPT: HyFlex Planning for Impact Evaluation

https://chatgpt.com/g/g-67eb031b8ba88191bf5d2b4952c00b1c-hyflex-planning-for-impact-evaluation

See an Example Chat: Example Conversation

https://chatgpt.com/share/67eb0849-994c-800b-a52d-64fb86113817

References

Adeel, Z., Mladjenovic, S. M., Smith, S. J., Sahi, P., Dhand, A., Williams-Habibi, S., Brown, K., & Moisse, K. (2023). Student engagement tracks with success in-person and online in a Hybrid-Flexible course. The Canadian Journal for the Scholarship of Teaching and Learning, 14(2). https://doi.org/10.5206/cjsotlrcacea.2023.2.14482

Kyei-Blankson, L., & Godwyll, F. (2010). An examination of learning outcomes in HyFlex learning environments. In J. Sanchez & K. Zhang (Eds.), Proceedings of E-Learn 2010—World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education (pp. 532–535). Association for the Advancement of Computing in Education. https://www.learntechlib.org/p/35598/

Lakhal, S., Khechine, H., & Pascot, D. (2014). Academic students’ satisfaction and learning outcomes in a HyFlex course: Do delivery modes matter? In T. Bastiaens (Ed.), Proceedings of World Conference on E-Learning (pp. 1075–1083). Association for the Advancement of Computing in Education. https://www.learntechlib.org/p/148994/

Li, K. C., Wong, B. T. M., Kwan, R., Chan, H. T., Wu, M. M. F., & Cheung, S. K. S. (2023). Evaluation of hybrid learning and teaching practices: The perspective of academics. Sustainability, 15(8), 6780. https://www.mdpi.com/2071-1050/15/8/6780

Mahande, R. D., Setialaksana, W., Abdal, N. M., & Lamada, M. (2024). Exploring HyFlex learning modality through adaption-innovation theory for student learning equity. Online Journal of Communication and Media Technologies, 14(1), e202410. https://www.ojcmt.net/article/exploring-hyflex-learning-modality-through-adaption-innovation-theory-for-student-learning-equity-14170

Savignano, M., & Holbrook, J. (2023). Increasing community in a HyFlex class during COVID-19. Journal of Educational Technology Systems, 52(2), 187–202. https://journals.sagepub.com/doi/10.1177/00472395231205212

Wong, S. S. (2022). HyFlex teaching process evaluation during COVID pandemic for a baccalaureate core course about issues in nutrition & health. Journal of Nutrition Education and Behavior, 54(7). https://linkinghub.elsevier.com/retrieve/pii/S1499404622002846

Xu, Y., Abdul Razak, R., & Halili, S. H. (2024). Factors affecting learner engagement in HyFlex learning environments. International Journal of Evaluation and Research in Education, 13(5), 3164–3178. https://ijere.iaescore.com/index.php/IJERE/article/view/28998

Yin, Z., Yang, H. H., & Zhu, S. (2025). Examining students’ acceptance of large-scale HyFlex courses: An empirical study. British Journal of Educational Technology, 56(1), 42–60. https://bera-journals.onlinelibrary.wiley.com/doi/10.1111/bjet.13477

Zamora, A. A. (2025). Overview of preliminary study findings: Comprehensive Institutional Model (CIM) in the post-COVID-19 HyFlex environment. International Journal of Advanced Corporate Learning, 18(2), 51–59. https://online-journals.org/index.php/i-jac/article/view/52573

Author

Dr. Brian Beatty is Professor of Instructional Design and Technology in the Department of Equity, Leadership Studies and Instructional Technologies at San Francisco State University. At SFSU, Dr. Beatty pioneered the development and evaluation of the HyFlex course design model for blended learning environments, implementing a “student-directed-hybrid” approach to better support student learning.